Rulemaking and rule-taking are among the most meticulous processes in modern society.

They demand precision, context, and judgment, since even minor missteps can create far-reaching legal and societal consequences. For this reason, artificial intelligence (AI) should never fully replace human judgment in these domains.

At the same time, the authorized – and unauthorized – use of AI across organizations means that its opportunities and risks must be acknowledged and managed with care. The most responsible approach is to treat AI as a supporting tool: a capable assistant, not a decision-maker. Humans must retain ultimate accountability for drafting, interpreting, and enforcing rules.

Advancing beyond the limitations of generative AI

The first-ever chatbot, ELIZA, was developed in 1966 and opened up a new future for how humans interact with computers. Today, AI-powered chatbots are integrated into everything from customer service to medical care.

Despite this promise, generative AI has clear limitations when applied to the complex domains of law, standards, and guidance. These models excel at producing plausible-sounding text, but they lack a true understanding of context and intent, both of which are integral to any rule-based system. Generative AI can draft a contract or summarize a policy, but it cannot reliably interpret legal nuance or grasp the intended purpose of a rule.

Moreover, generative AI depends on precise prompts and constant human supervision: it will not take initiative beyond the instructions it receives.

Recent instances of AI lacking good judgment, such as Taco Bell’s AI system allowing one person to order 18,000 water cups, have understandably prompted calls to approach the new technology with caution. In the creation and consumption of rules especially, a conservative approach ensures that the integrity of the law isn’t compromised.

As a result, the role of generative AI has been largely confined to passive support. This is useful, but insufficient for more complex tasks. That is where agentic AI differs.

Agentic AI addresses many of these shortcomings by moving beyond static output generation toward taking multiple actions. Unlike generative models that wait for human prompts, agentic systems can initiate tasks, monitor environments, adapt to new information, and work toward goals.

So how can agentic AI be used in legislation, standards, and guidance without compromising security?

Agentic AI: researching new rules, not writing them

Those responsible for making the rules now have a team of AI agents at their disposal. There is an optimal approach to getting the most efficiency out of this new digital labor.

The most promising use of agentic AI in legislative and regulatory work is research. Teams of AI agents can operate within a defined legal framework to gather, analyze, and organize vast amounts of information more quickly and comprehensively than human researchers alone. This includes tracking jurisdictional differences, monitoring regulatory changes, and generating structured reports.

Such AI systems do more than produce simple answers: they can draft pages upon pages of legal research. This gives human drafting and policy-making staff more time to focus on deliberation and judgment.

These AI agents are not unlike savants: geniuses in specific areas who lack knowledge in others. Much like the famous mathematician Srinivasa Ramanujan, agentic AI is capable of superhuman information processing but can still be wrong at times. As with any emerging technology, rigorous human oversight remains essential.

Supporting compliance through safe consumption of rules

A whole host of organizations depend on these rules in order to operate correctly. Rules exist as rigid boundaries to operate within, and so there can be no frivolity in their implementation. That need for strict compliance does not exclude the rule-takers from reaping the benefits of integrating AI into their work. But how can both regulators and regulated industries best use agentic AI?

Consider the large library of materials, laws, and guidance that these organizations must keep track of. The sheer volume of information, which changes regularly and varies by region, requires constant monitoring. This is where a team of AI agents can be extremely useful.

Not only can agentic AI monitor what is changing and what is relevant, it can also create summaries of essential information. The teams responsible for monitoring the rules are assisted in their work, but they do not delegate any further action on the retrieved information to AI. The decision-making is still left to the humans.
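The division of labor described above can be illustrated with a minimal Python sketch. It assumes a simple design that is not taken from any particular product: the agent fingerprints each rule text, detects when a text is new or has changed, and places a short summary in a queue for human review. The names `RuleMonitor`, `ReviewItem`, and the rule identifier `reg-001` are hypothetical, and the summary is a placeholder where a real agent would call a language model.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ReviewItem:
    """A detected change waiting for a human decision."""
    rule_id: str
    summary: str
    decision: str = "pending"  # the monitor never sets this; a human reviewer does


@dataclass
class RuleMonitor:
    """Tracks rule texts by fingerprint and queues changes for human review."""
    fingerprints: dict = field(default_factory=dict)
    review_queue: list = field(default_factory=list)

    def check(self, rule_id: str, text: str) -> bool:
        """Return True if the rule is new or changed, and queue it for review."""
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if self.fingerprints.get(rule_id) == digest:
            return False  # unchanged since the last check
        status = "changed" if rule_id in self.fingerprints else "new"
        self.fingerprints[rule_id] = digest
        # Placeholder summary: a real agent would generate this with a model.
        self.review_queue.append(ReviewItem(rule_id, f"{status}: {text[:60]}"))
        return True


monitor = RuleMonitor()
monitor.check("reg-001", "Records must be kept for five years.")
monitor.check("reg-001", "Records must be kept for five years.")   # no change
monitor.check("reg-001", "Records must be kept for seven years.")  # queued again
for item in monitor.review_queue:
    print(item.rule_id, item.decision, item.summary)
```

The point of the design is that the monitor only detects and summarizes. Every queued item stays `pending` until a person acts on it.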

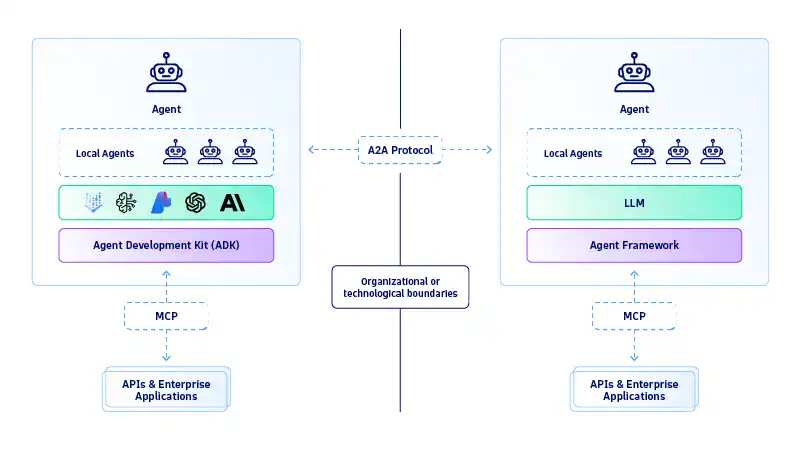

How the Model Context Protocol can enhance the agentic AI experience

Developments such as the Model Context Protocol (MCP) – an open protocol for connecting large language model applications to external tools and data sources – are accelerating the practical deployment of agentic AI. MCP provides a structural foundation for building modular workflows where AI agents can interact with external data sources and even with one another in coordinated ways.

By embedding memory and context management directly into workflows, MCP enables AI agents to carry out meaningful tasks such as monitoring regulatory changes or cross-referencing compliance requirements, without constant human prompting. This transforms AI from an enhanced search tool into a system capable of sustained, goal-oriented support for rulemaking and compliance.
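To make this more concrete, MCP messages are exchanged as JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. The sketch below only builds such a request as a JSON string; it does not use an MCP SDK or open a connection. The tool name `search_regulations` and its arguments are hypothetical and would be defined by whichever server an organization deploys.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP-style `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and arguments, for illustration only.
request = build_tool_call(
    1,
    "search_regulations",
    {"jurisdiction": "EU", "changed_since": "2025-01-01"},
)
print(request)
```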

Monitoring machines to mitigate risks

As agentic AI systems take on more responsibility, transparency becomes critical. Unlike traditional software, which follows explicitly coded logic, agentic systems operate with their own dynamic reasoning processes. This creates new challenges for accountability and trust.

Robust audit trails are essential: systems that document the full chain of reasoning, data sources, tools, and contextual inputs used. Without such traceability, organizations risk deploying systems that are difficult to supervise or correct.
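As an illustration, an audit trail of this kind could be sketched in Python as an append-only log in which each record captures the reasoning, data sources, tools, and context behind one agent step. The hash chain linking each record to the previous one is an added assumption, included here as one common way to make later tampering detectable. The class names, field names, and sample values are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AuditRecord:
    """One agent step: what it reasoned, what it read, and which tools it used."""
    agent: str
    reasoning: str
    data_sources: tuple
    tools: tuple
    context: str
    prev_hash: str  # digest of the previous record, "" for the first one

    def digest(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log whose records are linked by a hash chain."""

    def __init__(self):
        self._records = []

    def append(self, agent, reasoning, data_sources, tools, context):
        prev_hash = self._records[-1].digest() if self._records else ""
        record = AuditRecord(agent, reasoning, tuple(data_sources),
                             tuple(tools), context, prev_hash)
        self._records.append(record)
        return record

    def verify(self) -> bool:
        """Return True if every record still points at its true predecessor."""
        expected = ""
        for record in self._records:
            if record.prev_hash != expected:
                return False
            expected = record.digest()
        return True


trail = AuditTrail()
trail.append("monitor-agent", "Retention period differs from stored version.",
             ["official-gazette"], ["text-diff"], "quarterly compliance review")
trail.append("summary-agent", "Summarized the change for the compliance team.",
             ["monitor-agent output"], ["summarizer"], "quarterly compliance review")
print(trail.verify())
```

A supervisor reviewing an agent's output can then walk the records in order and see what each step relied on, while `verify` indicates whether an earlier record has been altered after the fact.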

Deploying agentic AI to strengthen laws, standards, and guidance

Looking ahead, the integration of agentic AI into the rulemaking and rule-taking ecosystems offers both immense promise and profound responsibility. As these systems continue to evolve, their implementation must be guided by principles of transparency and human accountability.

Used correctly, agentic AI can strengthen the processes that underpin laws, standards, and guidance, ensuring that society benefits from innovation without compromising the integrity of its rules.